Getting the iPad to Pro

The new iPad Pro is a computer from the future, with software from yesterday

I love my iPad.

I am conflicted by my iPad.

I am bullish on the A-chips that power iPads as the future of Apple’s computers.

You know the drill: Like clockwork, the sleek, new iPad Pros are here. They’re a bit lighter, with nicer screens, and much faster processors.

In fact, these new iPad Pros are so high-powered that they benchmark close to top-of-the-line MacBook Pro Intel-powered laptops.

Apple — Apple! — itself is hardcore subtweeting Intel via Ars Technica interviews.

Because: This is magic — getting this kind of power into a device so devoid of mass and heat.

As Gruber points out, the power-per-buck is unmatched in Apple’s history. I’d say the power-per-cubic-millimeter is even more impressive.

Super computers shoved into thin space beneath extra-tough glass. Digi-Slates. Actual Gibsonian, Stephensonian objects from the future. Throw them in your backpack, a few hundred grams, big screen, cellular high-speed connectivity, all-day battery life. Uhm, yes, please.

The new iPads are arguably the most beautiful (or at least beautifully minimal) compute devices ever made, and Apple is unambiguously positioning the iPad as the computer you should want.

“iPad Pro does what a computer does but in more intuitive ways,” they say. But why iPad? you ask. “Because it works like a computer. And in ways most computers can’t,” they follow up, cryptically.

As if to hammer this computer idea home, these new iPads even have a USB Type-C port and connect to — in a move that seems to rally against the very Newtonian fundamentals of touch-screen tablets — external monitors.

So they’re serious workhorses now, right?

I’ve used iPads for eight years. Ever since the incredibly clunky — but oddly enthralling — version one.1 Mostly, it’s been confusing. Just what the heck are these things for? They’re definitely excellent for hypnotizing small children at restaurants. But since 2017, with the release of iOS 11 and basic multitasking, you could maybe — just maybe — earnestly use them as potential laptop replacements.

These new iPads may be gorgeous pieces of kit, but the iPad Pros of 2017 were also beautiful machines — svelte and overpowered. In fact, the iPad Pro hardware, engineering, and silicon teams are probably the most impressive units at Apple of recent years. The problem is, almost none of the usability or productivity issues with iPads are hardware issues.

Which is to say: For years now, the iPad’s shortcomings have all been in iOS.

On a gut level, today’s iPad hardware feels about two or three years ahead of its software. Which is unfortunate, but not unfixable.

Let’s assume — as all the marketing seems to imply — that Apple wants us to treat these machines as primary computers. And assume we’re professional computer folk, who do complicated computer things.2

Having used the heck out of iPads these past few years, I believe there are two big software flaws that both make iOS great, and keep it from succeeding as a “pro” device:3

- iOS is primarily designed for — and overly dependent on — single-context computing

- Access to lower-level components (i.e., a file-like system) is necessary for professional edge-tasks

And one big general flaw that keeps it from being superb:

- Many software companies still don’t treat the iPad as a first-class computing platform4

Let’s dig into real-world examples of where these issues present problems.

#Writing & all the contexts

What do I do on my computers? I write, edit photographs, design books, record and edit audio, send emails, and research online. I also do a bit of web development, and so from time to time I will sit in Terminal.app, git-ing things, knocking on the sides of servers.

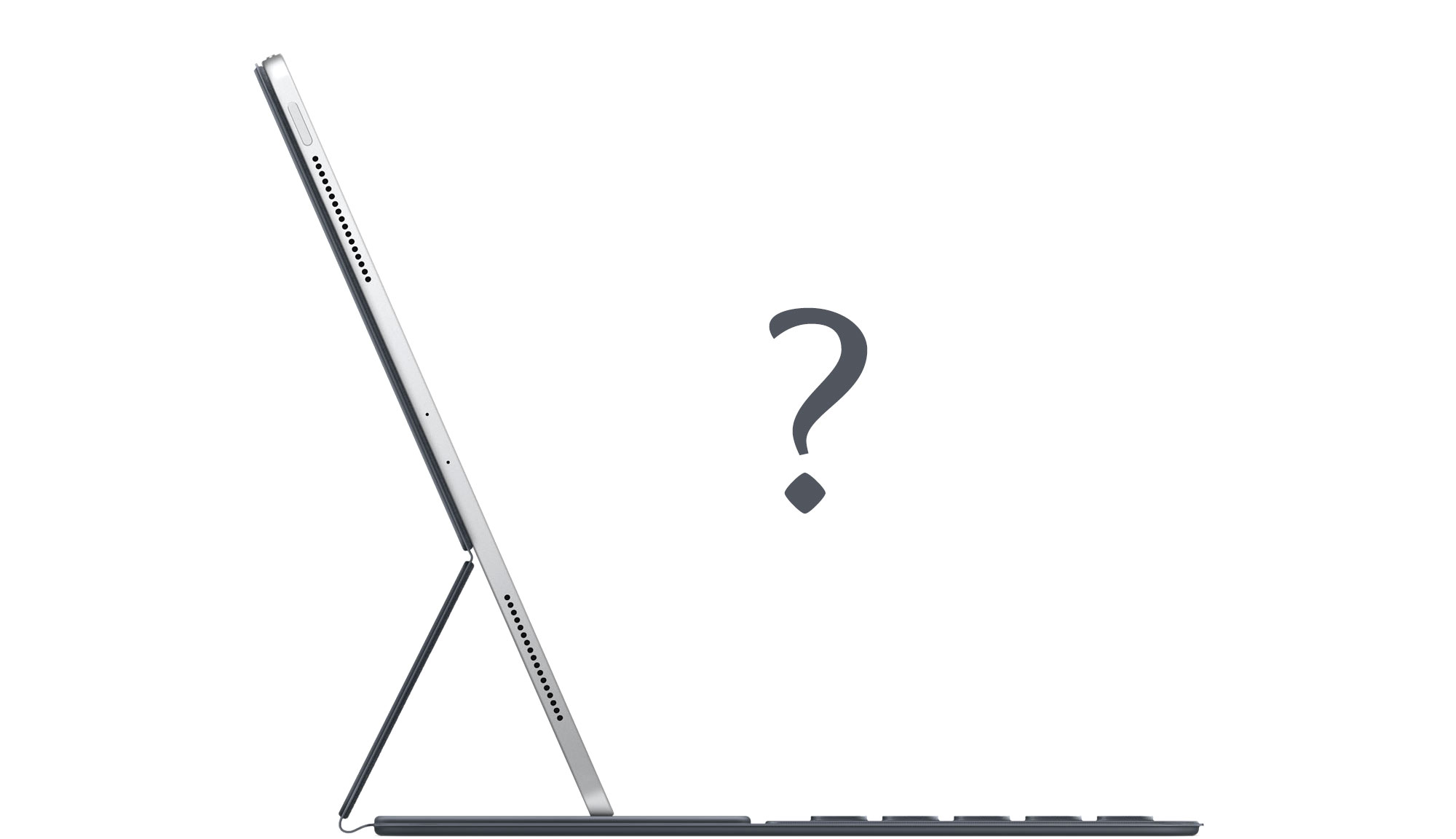

iPads do writing just fine, especially since the release of the Smart Keyboard. An iPad Pro with a Smart Keyboard feels like a nearly indestructible, grossly overpowered writing machine.

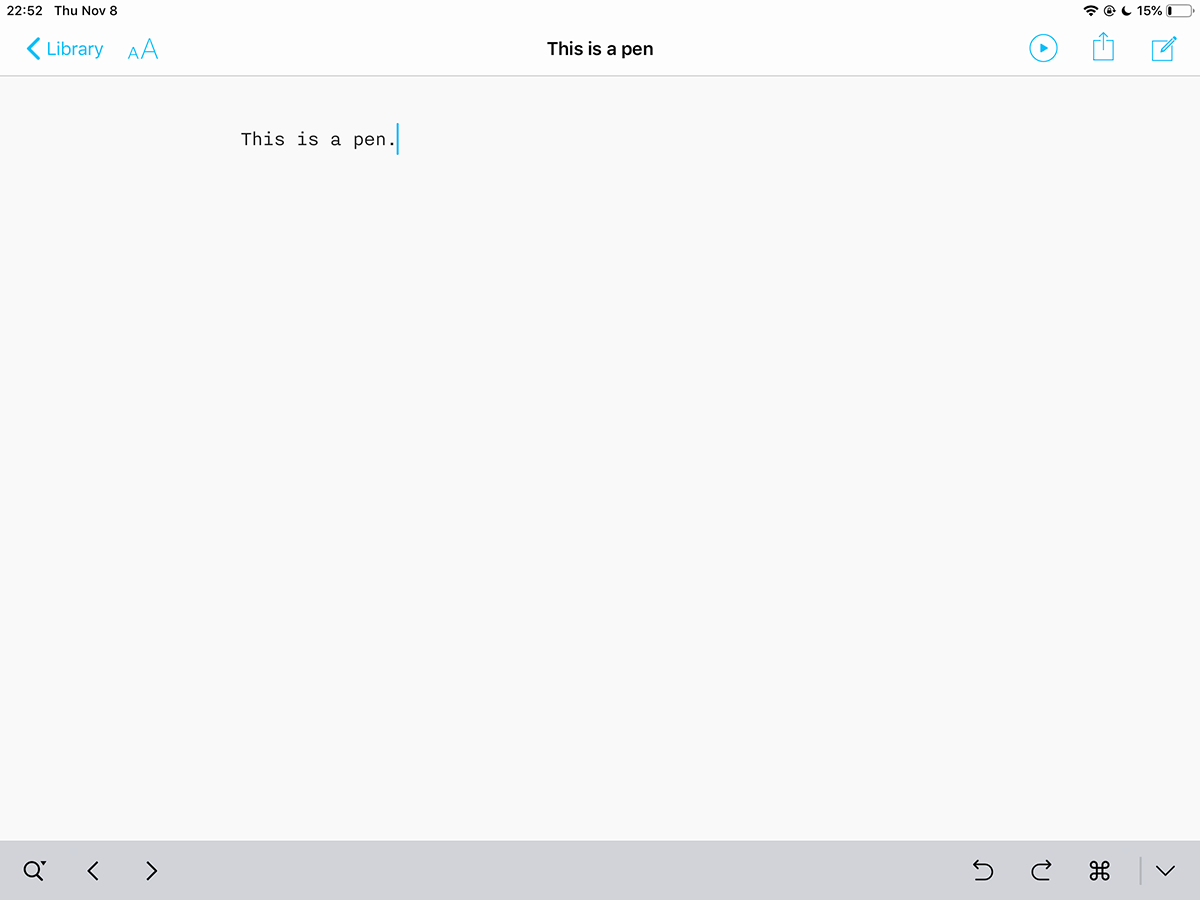

iA Writer, Scrivener, and Ulysses are all full-featured, totally serviceable writing apps. You can write anything from a grocery list to a John McPhee-esque non-fiction opus on the iPad. Export as PDFs or EPUBs or .docx files. And because iOS is first and foremost designed for single-context use, the default view is full-screen focus-mode. iCloud as a syncing and storage option has matured and now works reliably. Handing off documents from iPad to iPhone to desktop is often seamless.

Good so far.

The problems begin when you need multiple contexts. For example, you can’t open two documents in the same program side-by-side, which would let you reference one set of edits while applying them to a new document.5 Similarly, it’s frustrating that you can’t open the same document side-by-side. This is a weird use case, but until I couldn’t do it, I didn’t realize how often I did do it on my laptop. The best solution I’ve found is to use two writing apps, copy-and-paste, and open the two apps in split-screen mode.

Daily iPad use is riddled with these sorts of kludgey solutions.

Switching contexts is also cumbersome. If you’re researching in a browser and frequently jumping back and forth between, say, (the actually quite wonderful) Notes.app and Safari, you’ll sometimes find your cursor position lost, with the Notes.app document you were just editing occasionally resetting itself to the top.6 For a long document, this is infuriating and makes every CMD-Tab feel dangerous. It doesn’t always happen; the behavior is unpredictable, which makes things worse. This interface “brittleness” makes you feel like you’re using an OS in the wrong way.

In other writing apps, the page position might remain after a CMD-Tab, but cursor position is lost. Leading to a frustrating circus of: CMD-Tab, start typing, realize nothing is happening, tap on screen, cursor inserts to wrong position, long-press on screen to get more precise input, move cursor to where it needs to be, start typing. This murders flow. It creates a cost to switching contexts that simply doesn’t exist on macOS, and shouldn’t exist on any modern computing device.7

#Photography

Photography ushers in a whole separate class of headaches.8 Unquestionably, the iPad Pro should be the ultimate photography editing machine: A gorgeous high resolution, wide-gamut color screen, perfect multi-touch sensitivity, an A-class chip able to rip through raw files. So where does it fail?

Simple tasks like importing images are arduous. The Lightning SD card reader only imports into Photos.app. Meaning, if you want to edit in any other program (Lightroom CC, for example) you need to import once to Photos.app, then reimport to Lightroom, then go back to Photos.app and delete all the raw files so you aren’t using double the space to store the images.

As Nilay Patel astutely observes in his unflinching iPad Pro review, the best way to do Lightroom CC photography on the iPad is to first import on the desktop.

But why? Apple seems to think: Photos.app should be your main image dumping ground, and all other programs should shimmy around that. Sadly, that’s not how you work in the field, and the photography I do with my “pro” cameras is very different than that which I do with my iPhone. Mingling those two contexts is a recipe for headaches.9

On the flip side, I have nearly 30,000 photos in my iCloud library, going back eighteen years. Many of these photos were taken with point-and-shoot digital cameras, the very ones the iPhone displaced once its camera improved. To be able to scan through this archive and find old memories at a whim — while getting coffee with an old university buddy, for example — does feel like magic. But that’s more a parlor trick than a professional need.

From a professional perspective, intuitively we should be able to import images directly into any context we want — Dropbox, Lightroom, whatever app or container supports the image format being imported. The fact that we can’t treat an SD card as a storage container unto itself doesn’t just frustrate on the photo side of things, it bleeds into other pro activities.

To relentlessly hammer home the point: I was traveling recently, iPad in hand, and realized I should update my camera’s firmware (in order to enable a new Wi-Fi image transfer which I was hoping might get me around the SD import issues).

The task: Download a file from a website, put it in the root of the SD card, and then insert the card back into the camera. Simple on a desktop (as long as you have the right dongle). One would think that, with iOS’s recently revamped Files.app browser for all the various cloud services, the SD card would also appear as another storage container. But it doesn’t. Alas, it’s impossible to put a file from the web in the root directory of an SD card on iOS.

You can argue that camera makers should have more seamless updates, perhaps by connecting the camera to a network and having it securely download, checksum, verify the digital signature of, and install the firmware itself. But you can also very reasonably argue that the United States should be using metric measurements. It doesn’t mean it will happen.

Even so, once your photos are finally imported, you find that programs like Lightroom CC on iOS are hamstrung in baffling ways. When editing in Lightroom CC on the desktop I make half a dozen virtual copies of good images to quickly compare different crops or edits. On iOS, the ability to make a copy of an image is inexplicably, infuriatingly, not present. You get one copy of each image per album, no more. I’m not sure why this is a limitation, but it simply highlights how iPad software is still, despite the amazing hardware, considered a second-class citizen at many major software companies.

#Miscellany; usability issues

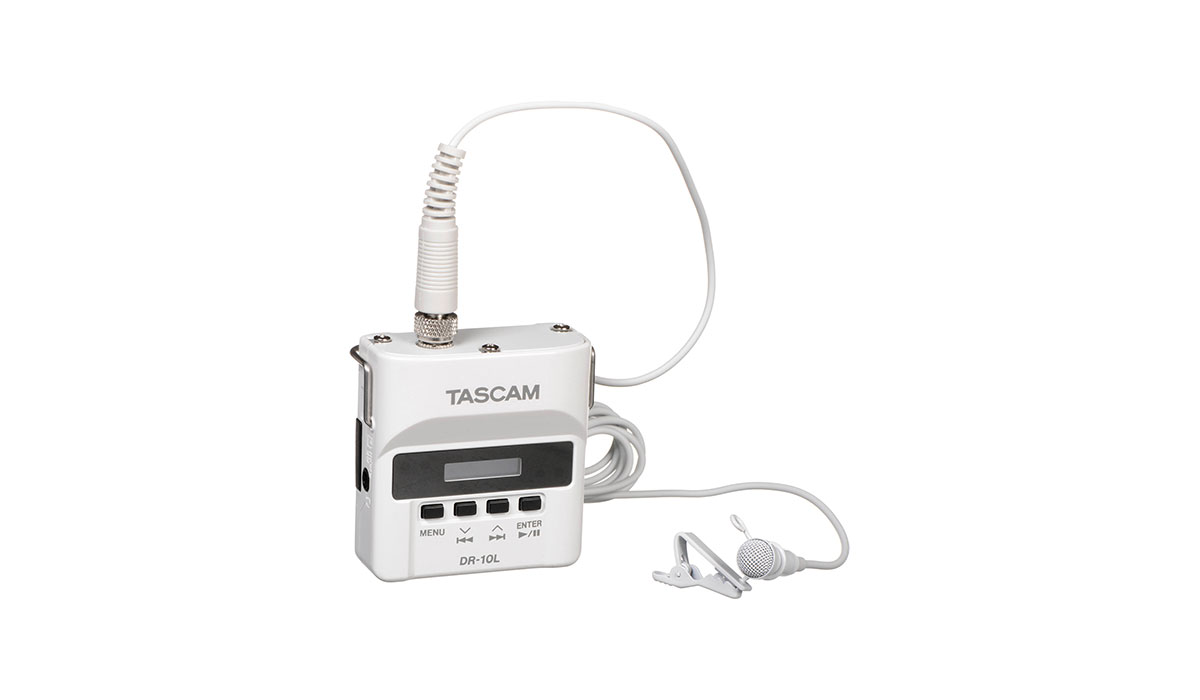

These past few months I’ve begun to produce field recordings from my walks in the mountains. Little interviews recorded in high-fidelity on impossibly small and light Tascam recorders with lavaliers. The ideal is to be able to edit the recordings in the field without having to lug around a laptop. But — as you might guess — it’s impossible to get the data off the SD card and into iOS.

Google Docs and Sheets both work on the iPad, but lack many of the features you find in them on a desktop browser. And the missing ESC key on the iPad makes for painful spreadsheet editing using current models of user interaction. (Update: A reader wrote in to inform me that CMD-. works like an ESC key on iOS and … lo and behold, it does. Spreadsheets are a little less painful.)

Many apps don’t even exist for iPad. Instagram, for example, has never bothered to release an iPad version of its iPhone app. Fortunately, iPads are able to run iPhone apps in a kind of emulation mode. Unfortunately, they can only do this in portrait orientation.

Even though the iPad’s processors have long since been powerful enough to run software like InDesign, Adobe hasn’t ported it and nobody else has been incentivized to build it. But, damn, it would be nice to set type on that screen, zoomed in, kerning by hand. Though, to make a book is to work with a half-dozen contexts: Pulling text from one place, images from another, editing on charts and illustrations yet elsewhere. Exporting. Test printing. If an iPad has trouble juggling just two or three contexts, I shudder to imagine wrangling my usual book design workload onto iOS.

Server development can be tricky. Because of the way iOS is built, you never know if a program has been reset in the background. Is your network connection in the terminal still active? Impossible to tell. It took Blink two years to tackle this, but it looks like they recently figured out how to hold a session across collapsing apps. Still — as a pro user, you want app states that are reliably consistent, not apps that vibrate somewhere in between alive and rebooted.

Multitasking on an iPad requires some pretty odd finger dances. Tap and hold, drag just a bit for one mode, drag a bit farther for another. Dragging a split-screen bar to change the ratio of split requires long presses, slow drags, and what looks like full refreshes of the running apps. It’s lumbering. It’s clunky. There’s a fluidity missing from these interactions.

See the work of Mike Matas and Bret Victor for examples of how fluid multi-context interactions can be. Everything in the OS could and should be an active object. Considering the power of these machines, it should all flow like a mini Minority Report workstation.

And there are still some edge-case iPad interactions that are just beautifully bewildering: For example, if you open an app as a “floating window” (only possible to initiate if the app is visible in the dock) by dragging it over a current running app (but not too close to the edge of the screen), and then slide that floating window off the screen, the next time you tap that app in the dock, it will open as a floating window, not as a full-screen instance.

Anyway, even typing that sentence out doesn’t make much sense.

I have a near endless bag of these nits to share. For the last year I’ve kept a text file of all the walls I’ve run into using an iPad Pro as a pro machine. Is this all too pedantic? Maybe. But it’s also kind of fun. When’s the last time we’ve been able to watch a company really figure out a new OS in public?

When I run into the above usability issues, it makes me wonder two things:

- Why am I trying to do something this way? (What strange habit or unnecessary expectation am I bringing to the table?)

- How would the simplicity of iOS be subverted by allowing this new thing to happen?

Computers are nothing if not a constellation of design and engineering details that either work for or against you. They either push you forward, smoothly, an encouraging tailwind allowing you to get done the work you want to get done, or they push back, become abrasive, breaking you from flow states, causing you to have to Google even the simplest task. I lost an hour the other day trying to open an Open Office Document.10 This is bananas. iPads should be better. They’re so close. And they’re certainly powerful enough.

The iPads of today are a far cry from that oddly rounded, difficult to hold, heavy, low-ish resolution first version of the iPad released in 2010. These new iPad Pros are, from a hardware perspective (whispering with hedged hyperbole), quite possibly the most impressive, certainly most beautiful, consumer computers ever made. And so expectations should be high for what we can do with them. We should expect to do more, more easily.

I recently returned from a ten-day hike during which I brought only my 2017 10.5” iPad Pro. It felt solid and reliable and I never worried about it getting wet or breaking. I love this quality of resilience in iPads. Even if the Smart Keyboard dies on me I can still type on the screen. I’ve been burned in the field by two MacBook Pros failing on me: One for the screen, the other the keyboard. So I mainly hike with just an iPad these days. Comparatively, it feels indestructible.

On my hike I was able to do clunky in-field photo imports as a backup, but I wasn’t able to back up my audio recordings. That was irksome. But I was able to type out notes in a small hut up on the top of a mountain as I interviewed a Buddhist sculptor, having carried an extremely light machine up 2,000 meters of vertical ascent, through sleet and rain, and never once did I worry about the device letting me down.

Still, when I came home and opened my MacBook Pro, it sang. macOS is clunky in its own ways — with nearly fifty years of UI ideas piled atop each other, the incumbency of older technologies making it more complex than it should be. But, damn, it sings. It responds as quickly as I can type, and if I need to hack together a solution — figure out how to open a weird file or automate a process or drag data from one space to another — my iOS mittens are off and the precision is surgical. It feels like a machine that trusts me to be an adult.

#Future Computing

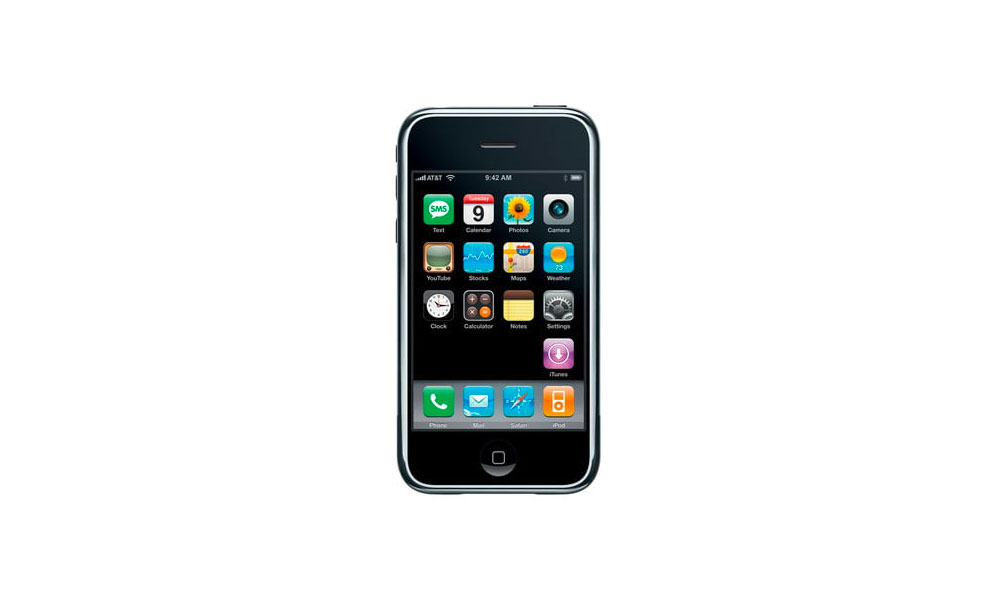

iOS began — and succeeded — as a rigorous exercise in single-context computing. The original iPhone and iOS were revolutionary — marrying simplicity and explicit interaction. Apple established a new mass-market baseline for computer interaction. On an iPhone-sized device, iOS today is still a marvel. On an iPad with an external keyboard, it’s a bit of a mess.

The ideal of computing software — an optimized and delightful bicycle for the mind — exists somewhere between the iOS and macOS of today. It needs to shed the complexities of macOS but allow for touch. Track pads, for example, feel downright nonsensical after editing photos on an iPad with the Pencil. But the interface also needs to move at the speed of the thoughts of the person using it. It needs to delight with swiftness and capability, not infuriate with plodding, niggling shortcomings. Keystrokes shouldn’t be lost between context switches. Data shouldn’t feel locked up in boxes in inaccessible corners.

Here’s my hope: With hardware as beautiful and powerful as that announced last week, companies and consumers will have to pay attention. Compared to two years ago, software on the iPad is already in a more mature place. Companies like Affinity produce professional-level, first-class apps for the iPad. In the next two years we’ll start to see genuine ports of other cornerstone creativity apps like Photoshop. Theoretically, in the process of porting, companies like Adobe will have an opportunity to refactor their decades-old software, making it faster and better than what’s on macOS. The equivalent of a professional software palate cleanser. We’ll all win once we get over this current hump.

That’s what I want to believe.

Buy one of these new iPads. They’re seductive beyond reason. Buy it knowing well what its limitations may be. But knowing also that it will get better. That its engine is years ahead of its software. And in owning one you’ll be able to follow along as a contemporary operating system matures in real time, beta-by-beta, before your very eyes. It’s not often we get to enjoy this.

For now, though, the iPad is still good for writing. Amazing for sketching. Reliable as a tank. Just don’t switch too quickly between apps. Don’t expect your cursor to be where you think it should be. Don’t try to do anything fancy with files. Don’t design a book on it. And don’t hope to open two documents from the same app side-by-side. Yet.

#Noted:

1. God almighty, in hindsight was that thing ever difficult to hold, heavy as a kettlebell, with bezels the size of interstate freeways. Wrap it in that tire-rubber case, like gloves for handling sulfuric acid, and it tipped about 80 kilograms. Interesting to note, however: the shift over time in default orientation, from portrait to landscape. The iPad originally intended to be a lean back device, demo’d in a Le Corbusier chair, Steve Jobs in repose, reading the gosh-darned New York Times. In clear opposition to a laptop. And now today, used mainly in landscape. With a keyboard attached, only in landscape. Impossible to resist, like fighting gravity itself, the machine became what it was naturally destined to be.

2. And also assume that these A-chips will find their way into MacBook Pros within two years’ time; but for now, are iPad only. But I would not be surprised to see iPads run both iOS and macOS, and switch between OSes when plugged into external monitors, thus fixing the weird UX snafu of touchscreen on a screen without touch.

3. Contrary to a lot of complaints I see about iPads, I don’t find the lack of a track pad / pointer to be an issue. Touch / Pencil and a Smart Keyboard have worked really well for me.

4. Including Apple! I asked for (the quite excellent) archive of all the data Apple has collected about me (fascinating, well-structured, illuminating, worth retrieving) and, when my archive was ready, upon visiting the archive download page on an iPad was told: This device is not supported.

5. An issue of RAM? Fixable by being more swap friendly? Thus entering us into Desktop Rogue Process land? Destroying battery life? Requiring some kind of Activity Monitor? Introducing excessive complexity? But allowing for more flexibility? Impossible to have cake and also eat it? Can cake be cloned? And if so, which cake is the “real” cake? Does the reference cake need to be preserved, in a cake sarcophagus? And if, perchance, eaten, all other cakes lost?

6. The obvious way to do this would be to allow for the dragging of any document in the writing app’s document browser to the side of the screen, opening up a second, split-screen view of that document. (Very deliberately avoiding the use of the word “file” here …)

7. Other odd text-related details have plagued iOS over the years. This seems to be fixed now, but text keyboard shortcuts didn’t always work as expected. Option-Arrow on an external keyboard is supposed to move the cursor one “word” forward or back. Sometimes it moved the cursor to the start of a word, sometimes it placed it before the space before a word. As the design adage goes: The paint on the back of the fence matters.

8. That I am guessing will be rectified in iOS 13? Making external disks real disks, and their file systems visible, and import contexts more flexible … but until then …

9. It also doesn’t help that Photos.app is an organizational mess. I’m never entirely sure if I want to tap “Photos” or “Albums,” if I want “All Photos” or “Moments.” One organizes based on date modified, one on date created. Where do videos live? Inline in “Photos” but also in “Albums” but only if you scroll down past all the albums, to a special “Media Types” section.

10. My fault for installing Open Office on my mom’s computer ten years ago.